Tailoring large language models for teaching relies on a valuable education expert: you.

56% of educators use AI sometimes, often, or always.

But only 25% of schools or districts have provided AI training to teachers.

These stats from our recent survey of 800 educators confirm what we already suspected: the majority of educators are using artificial intelligence for work. Yet most have not been given best practices to follow when relying on AI.

As a content engineer specializing in AI at Carnegie Learning, I’m passionate about helping educators learn about and use artificial intelligence. While most educators are familiar with large language models (LLMs) like ChatGPT, some remain skeptical of their usefulness, and others have only a limited understanding of them.

Whether you’re a current user of AI tools or are only just starting to learn what they can do for you, here is what you should know about large language models, how they work, and four ways to get the most out of them.

What are large language models?

Large language models like ChatGPT, Claude, and Google Gemini are very sophisticated forms of autocomplete. Based on the text you provide as input, they predict the next sequence of words and then generate it.

Let me explain it this way. How would you complete this sentence? What word or phrase comes first to mind?

"Pass the ___."

When I ask ChatGPT to complete the sentence with the most likely word, it comes up with "salt," which is what I would have said too.

But in different contexts, other words or phrases may be better, more likely endings to the sentence. If I first tell ChatGPT, "You are a member of Congress," the most likely word to complete the sentence changes from "salt" to "bill."

If I prompt ChatGPT with "Now you are in a school," the word "note" becomes the most likely ending. Personally, I disagree with this last choice; I would have said "test." Our "pass the salt" example highlights three key features of LLMs.

The first feature is data. The autocomplete on your phone has learned language patterns, grammar, and vocabulary from a relatively small set of text data. Developers train large language models on thousands of times more data than your phone's autocomplete learned from, drawing on websites, books, news articles, educational materials, scientific papers, conversations, and on and on. This is what puts the "large" in large language model.

The second feature is prompting. Through commands and added context, you can redirect the LLM to autocomplete in a way that better suits your specific needs or situations. When I prompted ChatGPT with "Now you are in a school," I did not change the underlying model. I simply narrowed its focus to text patterns that are more likely to occur in school settings than elsewhere.
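To make training data and prompting a little more concrete, here is a toy sketch in Python. It is an analogy under stated assumptions, not how ChatGPT actually works: the tiny `TOY_CORPUS` and the `most_likely_next` function are hypothetical, and real LLMs use neural networks trained on enormous amounts of text rather than simple word counts. Still, it captures the pattern: predictions come from the data the model learned from, and adding context (here, a setting) narrows which patterns count without changing that data.

```python
from collections import Counter

# Hypothetical toy "training data": each pair is (setting, word that followed "Pass the").
# Real LLMs learn from vast amounts of text with neural networks, not a lookup table like this.
TOY_CORPUS = [
    ("dinner", "salt"), ("dinner", "salt"), ("dinner", "salt"), ("dinner", "pepper"),
    ("congress", "bill"), ("congress", "bill"), ("congress", "budget"),
    ("school", "note"), ("school", "note"), ("school", "test"),
]


def most_likely_next(setting=None):
    """Return the word that most often followed "Pass the" in the toy corpus.

    With no setting, every example counts. Supplying a setting (a stand-in
    for added prompt context) only narrows which examples are counted; the
    corpus itself never changes.
    """
    counts = Counter(
        word for ctx, word in TOY_CORPUS if setting is None or ctx == setting
    )
    word, _count = counts.most_common(1)[0]
    return word


print(most_likely_next())            # salt
print(most_likely_next("congress"))  # bill
print(most_likely_next("school"))    # note
```

In this toy, "test" would win for the school setting only if the corpus contained more examples of it, which mirrors the point that an LLM's default answers reflect common patterns in its data.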

The third key feature is learning. As users interact with LLMs and provide feedback, these models are gradually refined to respond better. The autocomplete on your phone learns as well (though not mine, I'm convinced).

The difference is that your phone quickly learns your individual language patterns, while LLMs learn more gradually and more carefully, from the inputs and feedback of millions of users over time.

The impact of LLMs in education

Let's be clear. Large language models are here to stay. They are not a fad. Educators worldwide are already hard at work using LLMs for their needs.

Educators are generating lesson plan outlines, quizzes, and reading passages. They are using LLMs to both evaluate and provide feedback on responses to open-ended questions. They are providing conversational practice, vocabulary training, and grammar exercises for language learning.

They generate parent letters and create writing prompts, complex problem-solving scenarios, and project ideas. They also personalize instruction, practice, and other content for individual students and their families. Many AI tools have been developed to meet these (and other) needs.

Leveraging teacher experience with LLMs

Although AI can help with many tasks, not all LLM outputs will be equally effective. The key to improving student learning with LLMs is the input of teacher expertise. We must remember that, for all of their power and sophistication, large language models are repositories of common wisdom, not evidence-based best practices.

In our recent webinar “AI in Education: What You Should Know,” my co-presenter and I offered specific tips on how to optimize your use of AI. Here are four additional tips to help you, as an education expert, put the most into and get the most out of LLMs.

Who's the expert? You are! Don't treat LLMs like the web, because they are most certainly not the web. Nor are they mind-reading services designed to give you exactly what you want with no effort on your part.

The outputs you get from LLMs are very much dependent on what you provide as expert input. So, try not to settle. It can be tempting to accept the first response you get when using an LLM. After all, it's designed to sound reasonable and (somewhat) coherent. But be aware that LLMs are still learning.

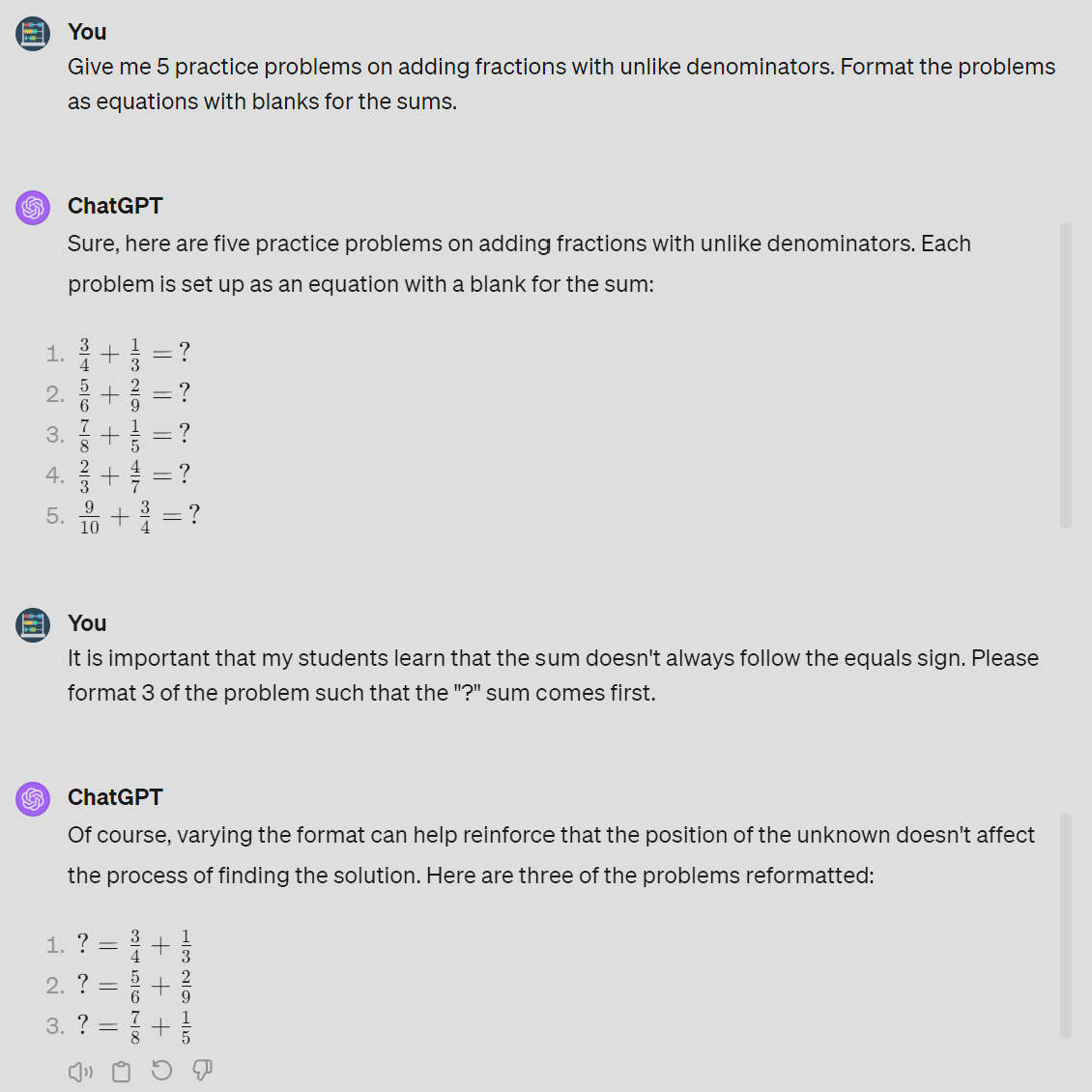

In this exchange with ChatGPT, I asked it to give me five practice problems on adding fractions with unlike denominators. The first response was not wrong, but not what I needed. As a result, I had to explain to ChatGPT that I wanted my students to learn that the sum doesn’t always follow the equals sign.

Once I clearly instructed it to reformat three of the problems so that the sum came first, ChatGPT rewrote them to fit my needs. The end result is a set of problems that illustrate the idea that the position of the unknown value does not affect the process of finding the solution.
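Since the screenshot is not reproduced in text, here is an illustrative pair of problems showing the two formats. These fractions are chosen only for illustration and are not taken from the ChatGPT exchange. The unknown sits on a different side of the equals sign, yet both problems are solved the same way, by rewriting each fraction over the common denominator 6:

$$\frac{1}{2} + \frac{1}{3} = \square \qquad\qquad \square = \frac{1}{2} + \frac{1}{3}$$

$$\frac{1}{2} + \frac{1}{3} = \frac{3}{6} + \frac{2}{6} = \frac{5}{6}, \quad \text{so } \square = \frac{5}{6} \text{ in both problems.}$$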

So, for any task you want to give an LLM, think hard about how YOU would do it first and work to make the outputs close to what you, the expert, want.

For any task you want an LLM to do, imagine pulling a random person off the street and explaining the task to them. This will naturally make you more detailed than you otherwise would be. And that's what LLMs need: specificity.

Every day, billions of people across the globe create sentences that have never been uttered before. That is how vast the space of language is. Large language models, no matter how big, can capture only part of that space.

So, you have to be specific. Conversing with LLMs can be both rewarding and frustrating because it requires you to carefully consider the precise meaning and phrasing of your statements. Giving examples of how you would complete a task is a great way to maximize quality. This can produce results that are sensitive to your own style and context.

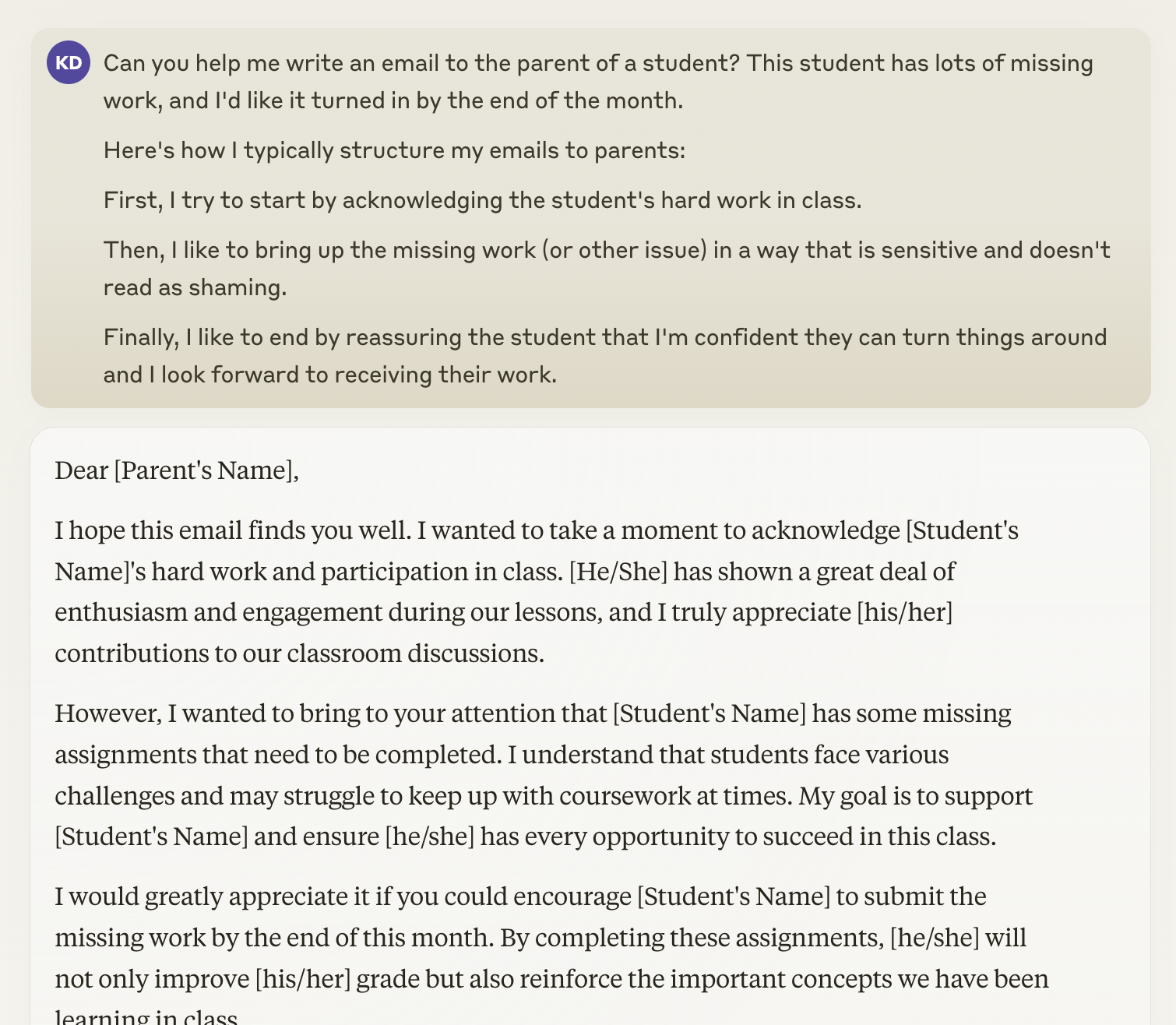

In this example, my colleague asked another AI assistant, Claude, to help write an email to the parent of a student with missing work. By telling Claude how she typically structures her parent emails, she got copy that matches the structure of other emails she has sent. By adding details such as “bring up the issue in a way that is sensitive and doesn’t read as shaming,” she directed Claude to strike the right tone for the email.

Teachers are natural pros at this. Can't seem to get the LLM to do what you want? Try breaking a complex task into parts, or emphasize to the LLM that a particular part of the task is "important."

When you adjust your prompts, try adding your changes at the beginning or the end first. In long prompts, LLMs occasionally "forget" what is in the middle (just like humans).

Think about your own context and use more precise words or examples to teach the LLM what it is you expect. Above all, be persistent.

If you know how to complete the task, chances are good that some language exists that can make an LLM do the same or something similar. All you have to do is find that language, and that often requires some creative thinking.

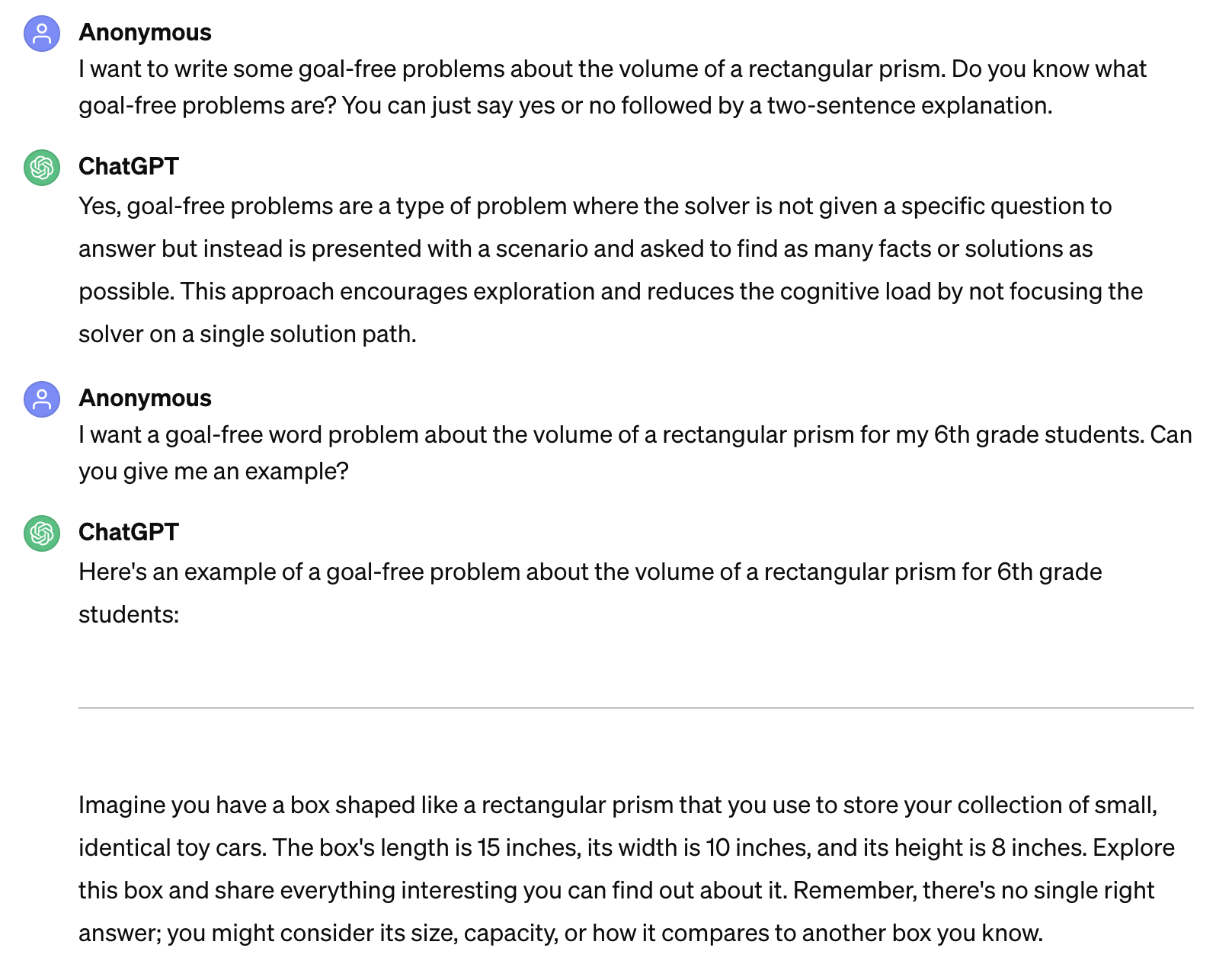

In this example, I’ve asked ChatGPT to write goal-free problems about the volume of a rectangular prism. However, instead of providing a simple, one-sentence instruction, I’ve included a question asking ChatGPT whether it knows what goal-free problems are. By adding the phrase “You can just say yes or no followed by a two-sentence explanation,” I can confirm that the LLM and I share an understanding of the task before it drafts the word problems.

When I'm working with ChatGPT on something complex, I very often start with a request that is less specific than I want and use the conversation to refine what I'm looking for. If I get good results at the end, I ask ChatGPT to write a prompt based on the conversation, one that will get me the results I want in one fell swoop.

That certainly doesn't always work, but it is very informative about how I can structure my requests. Don't forget that you can use LLMs not only to formulate responses but also to work with you on finding the right instructions.

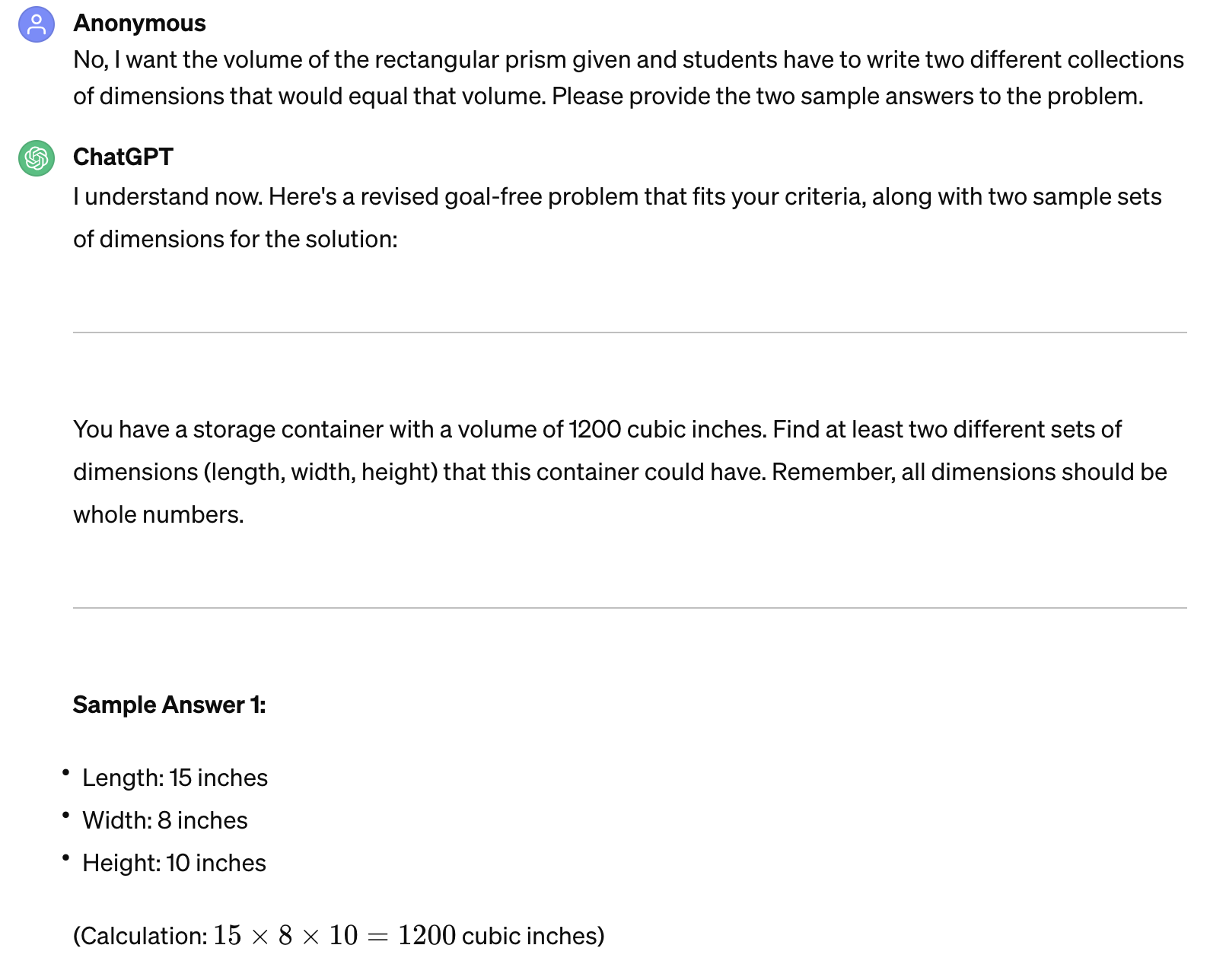

This example continues the previous request for a goal-free word problem. I’ve told ChatGPT that I am not satisfied with its initial suggestion, explaining that I want students to write two different sets of dimensions for a rectangular prism with a given volume. I have also asked for sample answers.
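To check sample answers for a task like this, a short script can list every set of whole-number dimensions for a given volume. This is a hypothetical Python sketch, not part of ChatGPT's response: the function name `whole_number_dimensions` is made up for illustration, and it assumes side lengths are positive whole numbers (fractional dimensions would also be valid answers but are not listed).

```python
def whole_number_dimensions(volume):
    """Return every (length, width, height) of positive whole numbers, with
    length <= width <= height, whose product equals volume."""
    results = []
    for length in range(1, volume + 1):
        if volume % length != 0:
            continue  # length must divide the volume evenly
        remaining = volume // length  # width * height must equal this
        for width in range(length, remaining + 1):
            if remaining % width != 0:
                continue  # width must divide what is left evenly
            height = remaining // width
            if height >= width:  # keep each set once, in sorted order
                results.append((length, width, height))
    return results


print(whole_number_dimensions(24))
# [(1, 1, 24), (1, 2, 12), (1, 3, 8), (1, 4, 6), (2, 2, 6), (2, 3, 4)]
```

For a volume of 24 cubic units, any two of these sets, such as 2 × 3 × 4 and 1 × 4 × 6, would satisfy the requirement of two different sets of dimensions.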

The importance of teacher knowledge

Teaching is an incredibly complex and nuanced profession that is difficult to fully understand for those who have not experienced it firsthand. That firsthand experience is the hidden knowledge that large language models (and common wisdom) lack. We have to teach AI this knowledge if we want it to be useful and impactful in education.

All we have to do is continue teaching.

Josh Fisher is the AI Content Engineer for Carnegie Learning, a mathematics instructional designer with over 27 years in K-12 mathematics, and a co-author of Carnegie Learning's MATHbook series. He has also contributed significantly to Carnegie Learning's unique digital products, leading content-creation efforts for both MATHstream and MATHia. Josh spearheaded the creation of LiveHint in 2020 and is now leading the development of its successor, LiveHint AI. Committed to innovation in 21st-century educational products, Josh brings to his work a passion for using instructional design and AI to democratize and enhance learning in mathematics.
